One breakthrough has reshaped natural language processing (NLP) more than any other in recent years: the Transformer architecture. It has become the backbone of state-of-the-art Large Language Model (LLM) development, driving advances in fields from machine translation to code generation. This post looks at how the Transformer architecture is used to train language models, how word-embedding weights are adjusted during training, how organizations fine-tune these models for their specific needs, and finally which LLMs hold the most promise for code generation.

1. Unleashing the Power of Transformers in LLM Training:

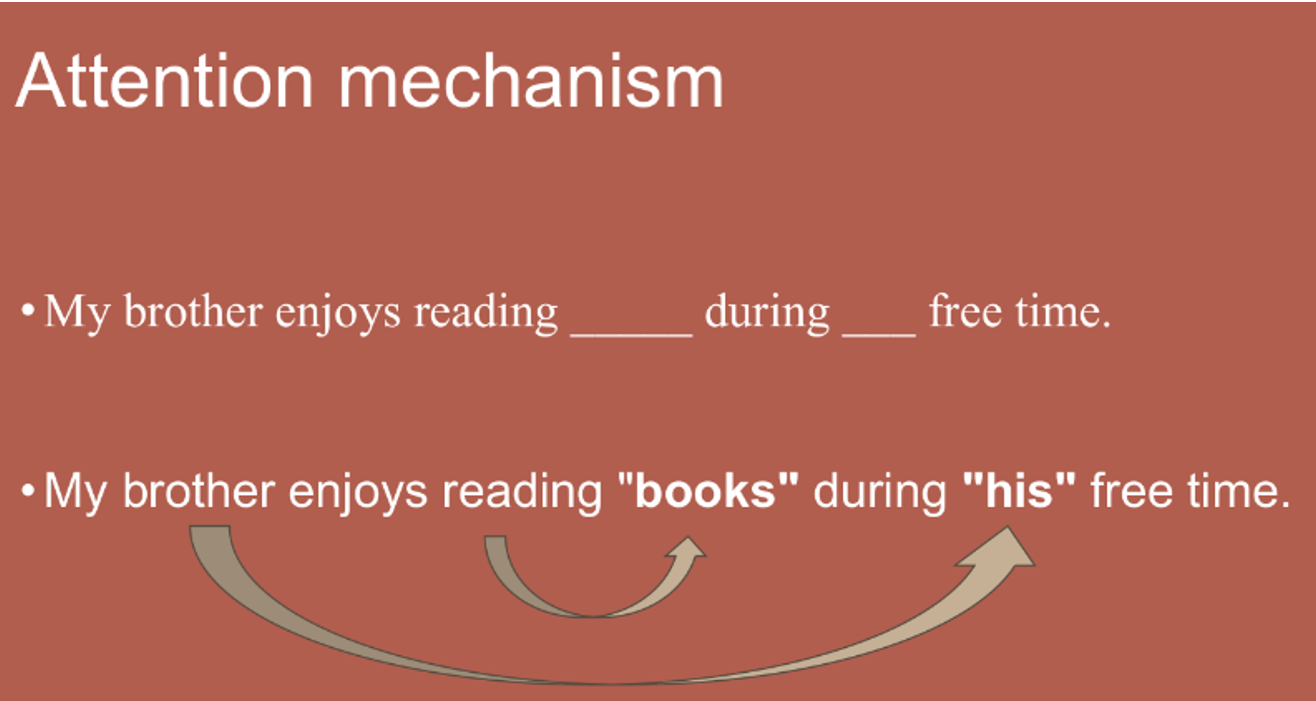

The Transformer architecture, introduced by Vaswani et al. in the seminal paper “Attention Is All You Need,” redefined the landscape of NLP by leveraging the self-attention mechanism. Unlike its predecessors, which relied heavily on recurrent or convolutional neural networks, the Transformer relies on an attention mechanism: for each word, it weighs how relevant every other word in the input is and builds a context-aware vector representation from that weighting. This helps an LLM understand a passage as a whole rather than interpreting each word in isolation.

Central to the Transformer architecture is the self-attention mechanism, which allows each word to attend to the other words in the input sequence, enabling contextual understanding and capturing long-range dependencies efficiently. This mechanism forms the core of both the encoder and decoder components. The encoder attends in both directions across the input, while the decoder masks future positions so that each generated word depends only on the words before it, which helps the model produce coherent and contextually rich outputs.
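To make the idea of attention weights concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It rests on a simplifying assumption: queries, keys, and values are the input vectors themselves, whereas a real Transformer first applies learned projection matrices and uses several attention heads. The function names and the toy vectors are illustrative only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, causal=False):
    """Scaled dot-product self-attention over a list of token vectors.

    Assumption: queries, keys and values are the raw inputs (no learned
    projections). With causal=True, each position ignores later positions.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, q in enumerate(x):
        scores = []
        for j, k in enumerate(x):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        w = softmax(scores)  # how much position i attends to each position j
        weights.append(w)
        # Output for position i is the attention-weighted sum of all vectors.
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights

# Three made-up 2-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
full_out, full_weights = self_attention(tokens)
causal_out, causal_weights = self_attention(tokens, causal=True)
```

Each row of `full_weights` sums to 1 and says how strongly one token attends to every token in the sequence. In `causal_weights`, the first token can only attend to itself, which is the kind of masking typically used on the decoder side.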

2. Weight Adjustment in Word Embedding:

Word embedding, a fundamental aspect of LLM training, maps words (or sub-word tokens) from a discrete vocabulary to continuous vector representations in a high-dimensional space. The weights in the embedding layer are learned during training: they are adjusted so that the resulting vectors reflect contextual relevance, semantic similarity, and syntactic structure, with words used in similar contexts ending up close together.
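As a rough illustration of what an embedding layer is, the sketch below builds a small lookup table that maps each word to a vector and compares two vectors with cosine similarity. The vocabulary, the dimension of 4, and the random initialization are all assumptions made for the example; in a trained model the rows would hold learned values rather than random ones.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is reproducible

vocab = ["the", "sky", "is", "blue", "black"]
token_to_id = {token: i for i, token in enumerate(vocab)}
dim = 4  # real models use hundreds or thousands of dimensions

# One row of weights per vocabulary entry; training would adjust these rows.
embedding = [[random.uniform(-0.1, 0.1) for _ in range(dim)] for _ in vocab]

def embed(token):
    """Look up the vector for a token (a plain table lookup)."""
    return embedding[token_to_id[token]]

def cosine(u, v):
    """Cosine similarity, a common way to compare embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

sky_vector = embed("sky")
similarity = cosine(embed("blue"), embed("black"))
```

Before training, `similarity` is essentially arbitrary. After training on real text, related words such as colour names would be expected to score higher than unrelated pairs.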

This weight adjustment is driven by optimization algorithms such as stochastic gradient descent (SGD) or its variants (Adam, for example), which minimize a loss function measuring the discrepancy between predicted and actual outputs. Through iterative updates, the embedding weights are gradually refined to capture nuanced relationships between words, enhancing the model’s ability to comprehend and generate human-like text.

Example: “The sky is …”

Output 1 (early in training): “The sky is bug.”

Output 2 (later in training): “The sky is black.”

Final output: “The sky is blue.”
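The progression in the example above can be imitated with a toy next-word model trained by gradient descent. This is only a sketch under stated assumptions: the six-word vocabulary, the averaged-embedding context vector, the learning rate, and the single training sentence are all invented for illustration, and a real LLM uses a full Transformer trained on an enormous corpus.

```python
import math
import random

random.seed(0)
vocab = ["the", "sky", "is", "bug", "black", "blue"]
tok_id = {t: i for i, t in enumerate(vocab)}
dim, lr = 8, 0.5

# Embedding table E and output weights W, both randomly initialized.
E = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]
W = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]

def forward(context):
    """Average the context embeddings, then score every vocabulary word."""
    ids = [tok_id[t] for t in context]
    h = [sum(E[i][c] for i in ids) / len(ids) for c in range(dim)]
    logits = [sum(W[v][c] * h[c] for c in range(dim)) for v in range(len(vocab))]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return ids, h, [e / total for e in exps]

def sgd_step(context, target):
    """One gradient-descent update on the cross-entropy loss; returns the loss."""
    ids, h, p = forward(context)
    loss = -math.log(p[tok_id[target]])
    # Gradient of cross-entropy w.r.t. the logits: p - one_hot(target).
    g = [p[v] - (1.0 if v == tok_id[target] else 0.0) for v in range(len(vocab))]
    # Gradient w.r.t. the context vector, computed before W is updated.
    dh = [sum(g[v] * W[v][c] for v in range(len(vocab))) for c in range(dim)]
    for v in range(len(vocab)):
        for c in range(dim):
            W[v][c] -= lr * g[v] * h[c]
    for i in ids:  # this is the "weight adjustment in word embedding"
        for c in range(dim):
            E[i][c] -= lr * dh[c] / len(ids)
    return loss

def predict(context):
    """Return the vocabulary word with the highest predicted probability."""
    _, _, p = forward(context)
    return vocab[p.index(max(p))]

context, target = ["the", "sky", "is"], "blue"
losses = [sgd_step(context, target) for _ in range(200)]
prediction = predict(context)
```

With random initial weights the first guess can be any word in the vocabulary. As the loss falls over the 200 updates, the probability assigned to the target word rises until the model completes the sentence with it.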

3. Fine-Tuning LLMs for Organizational Needs:

Organizations fine-tune LLMs to tailor them to their specific requirements and domains. The process starts from a pre-trained LLM and continues training it on a domain-specific dataset, so that the model’s weights are adjusted to perform well on specialized tasks.
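The core pattern of fine-tuning (start from weights that were already trained, then keep training on a smaller domain dataset, usually with a lower learning rate) can be sketched with a deliberately tiny model. Everything here is a stand-in: a two-parameter linear model replaces the LLM, and the "general" and "domain" datasets are synthetic. Real LLM fine-tuning is done with deep-learning frameworks and far larger models.

```python
def mse(params, data):
    """Mean squared error of the model y = w * x + b on a dataset."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr, steps):
    """Plain gradient descent on mean squared error; returns new (w, b)."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
        gb = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b

# Synthetic data: a broad "general" task and a shifted "domain" task.
general_data = [(x / 10, 2.0 * (x / 10)) for x in range(-10, 11)]    # y = 2x
domain_data = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(0, 6)]  # y = 2x + 1

# "Pre-training" from scratch on the general data.
pretrained = train((0.0, 0.0), general_data, lr=0.1, steps=500)

# "Fine-tuning": start from the pre-trained weights, use a smaller
# learning rate and fewer steps on the domain data.
finetuned = train(pretrained, domain_data, lr=0.05, steps=200)

error_before = mse(pretrained, domain_data)
error_after = mse(finetuned, domain_data)
```

The fine-tuned parameters fit the domain data better than the pre-trained ones (`error_after` is lower than `error_before`), while most of what was learned during pre-training is reused rather than relearned.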

For instance, in healthcare, organizations fine-tune LLMs to analyze medical records, extract valuable insights,

and assist in diagnosis and treatment recommendations. Similarly, in finance, LLMs are customized to understand

and generate reports, analyze market trends, and assist in decision-making processes.

4. The Champion LLM for Code Generation:

Devin AI, an AI software engineer developed by Cognition Labs, showcases what Large Language Models (LLMs) can do in software development. It is designed to work autonomously on coding, debugging, and deployment tasks, which can raise productivity and help bridge skill gaps among developers. As an end-to-end solution, Devin AI aims to streamline workflows and free developers to focus on innovation, and its capabilities are expected to grow over time.

Alongside Devin AI, OpenAI’s GPT models can also understand and generate code, an ability that stems from extensive exposure to programming-related text during training. By fine-tuning GPT on programming datasets and specific task objectives, organizations can use it to automate code generation, streamline software development workflows, and give developers intelligent coding assistance.

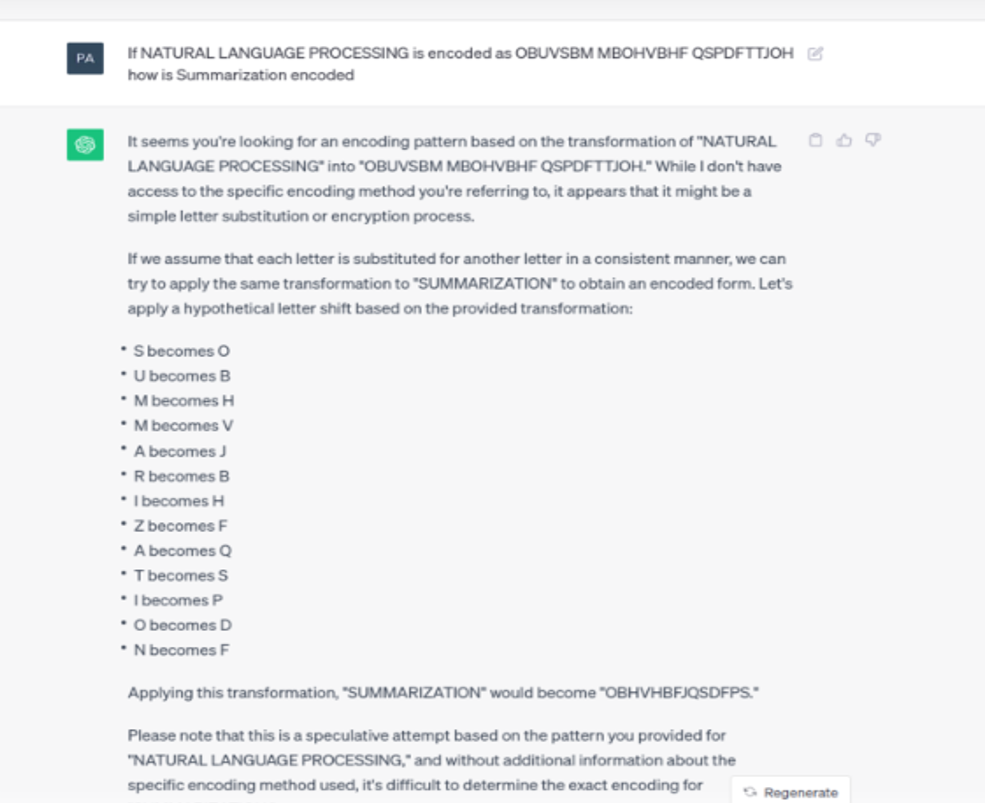

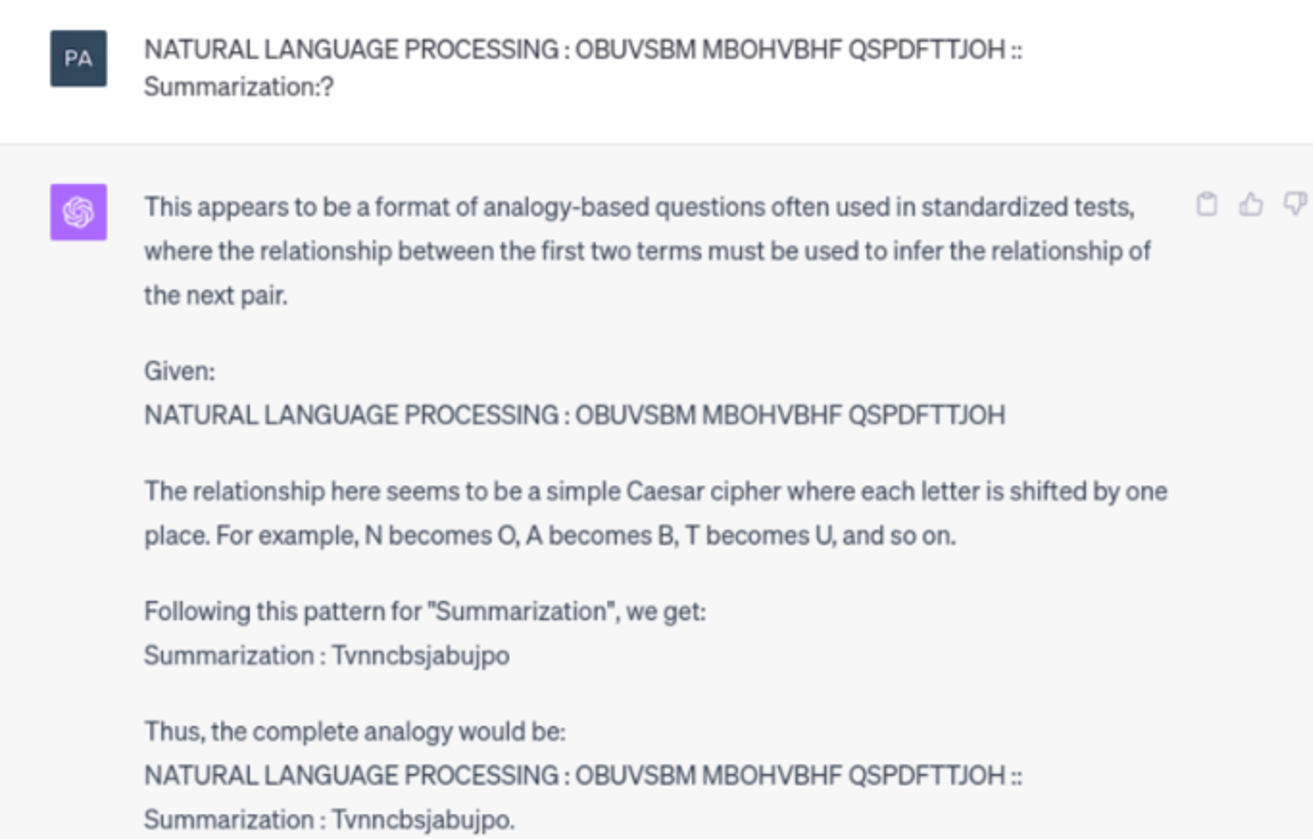

The screenshots above ask “What does GPT-3 know?”, repeat the question after giving hints, and then ask “What does GPT-4 know?”

They are examples of few-shot and zero-shot learning:

Zero-shot: the LLM performs a task it was not explicitly trained for, without any examples in the prompt.

Few-shot: the LLM performs a task it was not explicitly trained for after seeing a handful of examples in the prompt.
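In practice, the difference between the two shows up in how the prompt is written. The sketch below builds both kinds of prompt as plain strings; the `build_prompt` helper, the "Input:/Output:" layout, and the sentiment task are hypothetical choices for illustration, not a format required by any particular model.

```python
def build_prompt(task, query, examples=()):
    """Build a zero-shot prompt (no examples) or a few-shot prompt."""
    parts = [task]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    parts.append(f"Input: {query}\nOutput:")  # left open for the model
    return "\n\n".join(parts)

task = "Classify the sentiment of the input as positive or negative."
query = "The battery died after one day."

# Zero-shot: only the instruction and the question.
zero_shot = build_prompt(task, query)

# Few-shot: the same question, preceded by a handful of worked examples.
few_shot = build_prompt(
    task,
    query,
    examples=[
        ("I love this phone.", "positive"),
        ("The screen cracked immediately.", "negative"),
    ],
)
```

Both prompts end with an open `Output:` for the model to complete; the few-shot version simply shows the expected pattern first.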

Disadvantages of LLMs:

- Hallucinations: when an LLM does not know about a concept, it tends to make things up, giving answers that are irrelevant or wrong but sound confident.

- Jailbreaking prompts: current safeguards in LLMs can be bypassed with careful prompting.

Conclusion:

The landscape of language models is vast and continually evolving, with various contenders vying for supremacy in different domains. While OpenAI’s GPT series has garnered significant attention and acclaim for its versatility and performance, other players like GitHub Copilot and Devin AI showcase the diverse applications and potential of LLMs beyond traditional NLP tasks.

Each organization’s choice of LLM depends on its specific use cases, requirements, and domain expertise. While GPT models excel at generating human-like text and understanding natural language, specialized tools like Copilot and Devin AI, as well as models that organizations fine-tune themselves, cater to niche domains and offer tailored solutions to unique challenges.

Ultimately, the quest for the best LLM is subjective and depends on the context of its application. As

advancements in AI continue to unfold, we can expect further innovation and refinement in LLM technologies,

empowering organizations across industries to harness the power of language for transformative outcomes.
